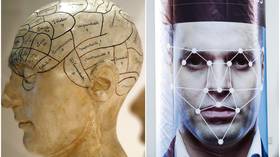

Phrenology is back, wrapped up with facial recognition in a 21st century pre-crime package by university researchers. Too soon?

Helen Buyniski is an American journalist and political commentator at RT. Follow her on Twitter @velocirapture23

15 May, 2020 22:09

The focus of US policing is shifting from enforcement to prevention as mass incarceration falls out of favor. ‘Pre-crime’ detection is the hot new thing, accomplished through analysis of behavior and…facial features?

Researchers at Harrisburg University announced earlier this week that they had developed AI software capable of predicting - with 80 percent accuracy! - whether a person is a criminal just by looking at their face.

“Our next step is finding strategic partners to advance this mission,” the press release stated, hinting that a New York Police Department veteran was working alongside two professors and a PhD candidate on the project.

That statement had been pulled by Thursday after controversy erupted over what critics slammed as an attempt to rehabilitate phrenology, eugenics, and other racist pseudosciences for the modern surveillance state. But amid the repulsion was an undeniable fascination - fellow facial recognition researcher Michael Petrov of EyeLock observed that he’d “never seen a study more audaciously wrong and still thought provoking than this.”

Purporting to determine a person’s criminal tendencies by examining their facial features implies evildoers are essentially “born that way” and incapable of rehabilitation, which flies in the face of modern criminological theory (and little details like “free will”). While the approach was all the rage in the late 19th and early 20th centuries, when it was used to justify eugenics and other forms of scientific racism, it was relegated to the dustbin of history post-World War II.

Until now, apparently. Phrenology and physiognomy - the “sciences” of determining personality by examining the size and shape of the head and face, respectively - are enjoying a comeback. A January study published in the Journal of Big Data made similar criminological claims about its AI “deep learning models,” boasting that one program demonstrated a shocking 97 percent accuracy in using “shape of the face, eyebrows, top of the eye, pupils, nostrils and lips” to ferret out criminals.

The researchers behind that paper actually named “Lombroso’s research” as their inspiration, referring to Cesare Lombroso, the “father of modern criminology” who believed criminality was inherited and diagnosable by examining physical - specifically facial - characteristics. Nor were they the first to turn AI algorithms loose on identifying “criminal” characteristics - their paper cites a previous effort from 2016, which apparently triggered a media firestorm of its own.

It might be too soon for the public to embrace discredited racist pseudoscience repackaged as futuristic policing tools, but given US law enforcement’s eager adoption of “pre-crime,” it’s not unimaginable that this tech might find its way into their hands.

US authorities have never been more determined to save would-be offenders from themselves, rolling out two pre-crime surveillance programs in the past year alone. The Disruption and Early Engagement Program (DEEP) purports to intervene with “court ordered mental health treatment” and electronic monitoring against individuals anticipated to be “mobilizing toward violence” based on their private communications and social media activity. The Health Advanced Research Projects Agency (HARPA)’s flagship “Safe Home” project, meanwhile, uses “artificial intelligence and machine learning” to analyze data scraped from personal electronic devices (smartphones, Alexas, FitBits) and provided by healthcare professionals (!) to identify the potential for “neuropsychiatric violence.” To maximize the programs’ effectiveness, Attorney General William Barr has called for Congress to do away with encryption.

The risks of pre-crime policing are enormous. Algorithmically-selected “pre-criminals” are very likely to be set up to commit crimes in order to “prove” the programs work, as has happened with the US’ sprawling “anti-terrorism” initiatives. A 2014 investigation found the FBI had entrapped nearly every “terrorism suspect” it had prosecuted since 9/11, and that pattern has continued into the present.

Meanwhile, facial recognition algorithms are up to 100 times more likely to misidentify black and Asian men than white men, and the misidentification rate for Native Americans is even higher, according to a NIST study.

The Harrisburg University researchers attempt to push such concerns aside, insisting their software has “no racial bias” - everyone is phrenologically analyzed on an equally pseudoscientific basis. Surely we can trust an NYPD officer to avoid racism. It’s not like 98 percent of those arrested for violating social distancing in Brooklyn in the last two months were black, or anything - it was 97.5 percent.

Given the frenzy of police-state wish-fulfillment - from babysitter-drones to endless lockdowns - that has accompanied the Covid-19 pandemic, these researchers probably thought they could slip in a sleek modernized version of century-old pseudoscience. Totally understandable!

Still too soon? Wait a few years…

Source: RT

~~~~~~~~~~~~~~~~~~~~~~~~~~~

The Digger’s Purpose and Standards

This site does not have a particular political position. We welcome articles from various points of view, and civil debate when differences arise.

Contributions of articles from posters are always welcome. Unless a contribution is really beyond the pale, we do not edit what goes up as topics for discussion. If you would like to contribute an article, let one of the moderators know. Likewise if you would like to become an official contributor so you can put up articles yourself, but for that we need to exchange email addresses and we need a Google email address from you.

Contributions can be anything, including fiction, poems, cartoons, or songs. They can be your own writing or someone else’s writing which has yet to be published.

We understand that tempers flare during heated conversations, and we're willing to overlook the occasional name-calling in that situation, although we do not encourage it. We also understand that some people enjoy pushing buttons and that cussing them out may be an understandable response, although we do not encourage that either. What we will not tolerate is a pattern of harassment and/or lies about other posters.

The URL for the site via Disqus is https://disqus.com/home/forum/the-digger/

The URL for the blogspot is http://newlevellers.blogspot.com
